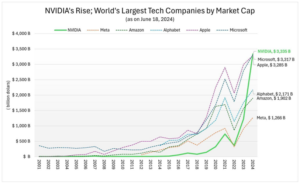

On June 18, microchip maker Nvidia became the world’s most valuable company, a meteoric rise for a firm that was not even in the top 20 a few years ago. In just two years, it has surpassed the market capitalisation of each of the Big Five tech companies: Microsoft, Apple, Amazon, Alphabet, and Meta. This rapid ascent is unprecedented in corporate history. In the speculative stock market, company valuations fluctuate constantly, and at the time of writing, Apple and Microsoft had again surpassed Nvidia. Nonetheless, Nvidia’s rise to the ranks of the world’s most valuable corporations remains remarkable. The primary driver behind this ascent is the recent progress in generative AI. Nvidia is a leading manufacturer of the specialised chips that provide the computing power essential for these technologies.

In recent years, technology companies have dominated the list of the world’s most valuable companies. Only a few companies from other sectors, such as Saudi Aramco and Berkshire Hathaway, are on this list. AI and related technologies are widely expected to have the greatest impact on human life and the economy in the near future. As a result, the world’s largest technology companies and many startups are vying for a competitive edge in these technologies. Governments are also racing to adopt this cutting-edge technology, which has piqued the interest of speculative finance capital in the sector.

Why Nvidia

AI is commonly associated with software companies that create complex algorithms. The critical role that chipmakers like Nvidia play in AI development is not immediately obvious. Brilliant programmers and deep knowledge of computer science and related disciplines are insufficient to propel this industry forward; massive infrastructure and significant capital investment are also required.

AI models built with artificial neural networks and other generative AI technologies rely heavily on the continuous analysis of large data sets. The computing power required for this is immense. These computations are performed on GPUs (Graphics Processing Units) from companies such as Nvidia, which are more suitable than the traditional CPU (Central Processing Unit) chips found in standard computers.

Until recently, Nvidia was known primarily as a manufacturer of GPUs for graphics-intensive devices like gaming consoles. In a technique known as accelerated computing, GPUs execute repetitive arithmetic operations in parallel, taking that work off the CPU so that it remains free for other tasks.
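To make the idea of parallel, repetitive arithmetic concrete, here is a minimal Python sketch. It is an illustration only: it runs on ordinary CPU threads rather than a GPU, and the function names `saxpy_chunk` and `saxpy_parallel`, as well as the default of four workers, are hypothetical choices made for this example. The point it shows is that each output value depends only on its own inputs, so the work can be split into independent pieces.

```python
from concurrent.futures import ThreadPoolExecutor


def saxpy_chunk(a, xs, ys):
    """Compute a*x + y for every pair in one chunk (same arithmetic, repeated)."""
    return [a * x + y for x, y in zip(xs, ys)]


def saxpy_parallel(a, xs, ys, workers=4):
    """Split the inputs into chunks and process the chunks independently.

    Assumption: this imitates the data-parallel pattern with CPU threads.
    A real GPU would run thousands of such element-wise operations at once.
    """
    n = len(xs)
    size = max(1, (n + workers - 1) // workers)  # ceiling division
    starts = range(0, n, size)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map preserves the order of the chunks in its results
        parts = pool.map(
            lambda s: saxpy_chunk(a, xs[s:s + size], ys[s:s + size]),
            starts,
        )
        return [value for part in parts for value in part]


result = saxpy_parallel(2, [1, 2, 3, 4, 5], [10, 20, 30, 40, 50])
print(result)
```

Because no chunk needs the result of any other chunk, adding more workers does not change the answer, only how the work is divided. That independence is what lets hardware with many simple cores process such workloads quickly.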

Jensen Huang, a Taiwanese-American electrical engineer with extensive chip industry experience, co-founded Nvidia in April 1993 with Chris Malachowsky and Curtis Priem. By 2006, Nvidia had established itself as the market leader in GPUs and introduced CUDA, a parallel computing platform and programming model based on the chips’ built-in parallel compute engine. Nvidia’s true fortune came when AI researchers realised that GPUs could provide the massive computing power required by machine learning technologies such as neural networks more efficiently than CPUs. As demand for such expensive computing platforms grew, so did Nvidia’s profits.

According to Forbes, the cost of the GPUs required to train OpenAI’s GPT-3 language model alone is around $5 million. ChatGPT used 30,000 GPUs to answer the daily queries of its millions of users in January 2023. This illustrates the importance of GPUs for the current generation of AI. Nvidia’s financial results for the first quarter of its fiscal year 2025 reflect the unwavering demand for AI technologies, with quarterly revenue rising to $26 billion, up 262% from a year ago. Data center revenue, which includes GPUs used to train generative AI, was $22.6 billion, while gaming, the company’s traditional business, accounted for only 10% of total revenue.
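The revenue figures above can be checked with some simple arithmetic. The short Python sketch below uses only the numbers quoted in this article ($26 billion in revenue, 262% growth, $22.6 billion from data centers). The function names are hypothetical, and the results are rough estimates derived from rounded figures, not reported values.

```python
def year_ago_revenue(current, growth_pct):
    """Back out last year's figure from this year's figure and the growth rate."""
    return current / (1 + growth_pct / 100)


def share_of_total(part, total):
    """Return a segment's share of the total, as a percentage."""
    return 100 * part / total


revenue_bn = 26.0       # quarterly revenue in $ billion, as quoted above
growth_pct = 262        # year-on-year growth in percent, as quoted above
data_center_bn = 22.6   # data center revenue in $ billion, as quoted above

implied_year_ago = year_ago_revenue(revenue_bn, growth_pct)
data_center_share = share_of_total(data_center_bn, revenue_bn)

print(f"Implied revenue a year ago: ${implied_year_ago:.1f} billion")  # about 7.2
print(f"Data center share of revenue: {data_center_share:.0f}%")       # about 87
```

In other words, a 262% increase means revenue is roughly 3.6 times what it was a year earlier, and data centers now account for close to nine-tenths of the total.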

Rise of oligopolies

Nvidia’s success story highlights some fundamental characteristics of the rapidly expanding AI industry that raise serious concerns. While internet technologies initially promised freedom and decentralisation, they quickly gave way to oligopolies. However, new AI technologies exhibit tendencies towards centralisation and monopolisation from the start. Large technology companies and global finance capital are likely to dominate these technologies, which require massive investment.

The cost of purchasing high-performance computing platforms, such as Nvidia’s, is just a fraction of the investment. These platforms also require significant electrical energy to operate. According to one study, generating an image with an AI model uses enough electricity to fully charge a smartphone. Google’s new AI-powered search engine consumes ten times more power per search than traditional Google searches, roughly equivalent to the amount of electricity consumed by talking on the phone for an hour. According to the International Energy Agency, electricity consumption from data centers, AI, and cryptocurrency will double by 2026 compared to 2022 levels. Meeting the massive energy demands of new technologies requires highly centralised and capital-intensive systems.

Only large corporations and ventures with significant financial resources will be able to thrive in this industry. The question is whether such an industry will be able to protect society’s larger interests as new technology fundamentally transforms society. One growing concern is that the lack of computing infrastructure at universities is driving experts in this field into private industry, stifling independent research.

The Washington Post recently published an article about Fei-Fei Li, a leading AI researcher, who called for funding to provide national infrastructure (computing power and data sets) so that university researchers can conduct AI research on par with private companies. The report cited a telling statistic: while Meta intends to acquire 350,000 GPUs for AI development, Stanford’s Natural Language Processing group has only 68 GPUs to meet its needs.

Chips and globalisation

Chips have become essential components in a variety of technologies, including household appliances, computers, mobile phones, communication systems, military weapons, and satellites. At the same time, the chip industry has a highly complex production cycle, supported by a global supply chain that is vulnerable to wars, pandemics, and other regional or global turmoil. Most new chips are designed in the United States but manufactured and packaged in East Asian economies such as Taiwan, with the necessary equipment made elsewhere, including in Europe.

Nvidia’s rise is just one facet of the fierce competition for more computing power. Today, superiority in advanced technologies is a key determinant of global economic and political power. As a result, developing computing infrastructure has become a top priority for governments. This competition has permeated the global economy, trade, and power dynamics among nations, and the complex global supply chain both intensifies and complicates it. Chris Miller, an economic historian and author of “Chip War: The Fight for the World’s Most Critical Technology,” contends that microchips are now as influential in globalisation and politics as petroleum once was.

The US-China tech war

The globalisation of chip manufacturing has become a key issue in the growing rivalry between the United States and China. China, which aspires to be a superpower in modern technologies such as 5G, AI, and quantum computing, considers self-sufficiency in chip manufacturing to be an important goal. Until a few years ago, developed capitalist countries saw Communist Party-ruled China as a sweatshop for cheap labor. That is no longer the case. China has emerged as a major economic power, challenging American dominance.

The Chinese government has launched deliberate efforts to achieve technological self-sufficiency. Its ‘Made in China 2025’ plan, adopted in 2015, envisions the country becoming the world’s leading manufacturing hub. These actions, according to the US, pose a significant threat to the unipolar world order it currently leads. The trade war with China, which began a few years ago with tariffs on Chinese imports, quickly escalated into a technology war. Fears of China becoming a military and economic power, the escalating Sino-Taiwan conflict, and supply chain disruptions caused by the COVID-19 lockdown have all contributed to Washington’s concerns.

The US administration regards microelectronics, including chips, as critical technology in countering China’s rise. Maintaining dominance in this industry is a common theme at the highest levels of the US government. For example, the CHIPS and Science Act, signed by President Joe Biden on August 9, 2022, seeks to restore US leadership in the semiconductor industry, reduce reliance on foreign-made chips, and defend against China. The act allocates $52.7 billion to US semiconductor research, development, manufacturing, and workforce development.

Limiting technology exports to China is one of the primary strategies employed by the US government in the US-China technology war. One goal is to prevent powerful AI chips like Nvidia’s from reaching China. These restrictions, which began in 2018, aim to limit China’s access to technology areas that pose a threat to US economic and global political interests. They include adding Chinese companies to the Bureau of Industry and Security’s “Entity List,” subjecting them to export restrictions and licensing requirements for specific technologies and goods.

On October 7, 2022, new restrictions were imposed to limit China’s ability to develop advanced semiconductor technologies, escalating these sanctions. One primary goal was to impede China’s military modernisation efforts. A year later, the rules became more stringent. The Biden administration is implementing plans to prevent new American investment in critical Chinese technology industries that could be used to strengthen China’s military power.

In short, both regimes are attempting to reduce interdependence while increasing strategic self-sufficiency. It is impossible to predict where this process, known as decoupling, will lead. It is impossible to completely reverse economic globalisation. However, the changes brought about by the ongoing tech war will be far-reaching, altering the power dynamics of the global economy and politics. As new technologies rely heavily on computing power, chips have become a metaphor for the engine driving the new world order.

Ajith Balakrishnan is an engineer, information technology expert, and social observer. He writes about technology, economy, environment, society, and politics.